Deep Learning | EECS 498-007 / 598-005 | Image Classification

Robustness

Edge images

Image Classification:

input: image

output: Assign image to one of a fixed set of categories.

Problem: the Semantic Gap between the raw pixel values a computer sees and the category a human perceives.

Object Detection:

One way to perform object detection is to run image classification on different sliding windows in the image. In a nutshell, we classify different sub-regions of the image: we look at one sub-region, classify it as background, horse, person, car, truck, and so on, then move to the next sub-region.
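The sliding-window idea can be sketched in a few lines. This is only an illustration under stated assumptions: the image is a small 2-D list of pixel values, and `sliding_windows`, `detect`, and `toy_classifier` are hypothetical names, with `toy_classifier` standing in for a real image classifier.

```python
def sliding_windows(height, width, window, stride):
    """Yield (top, left, bottom, right) boxes covering an image of the given size."""
    for top in range(0, height - window + 1, stride):
        for left in range(0, width - window + 1, stride):
            yield (top, left, top + window, left + window)


def detect(image, classify_image, window=2, stride=1):
    """Classify every window and keep the ones not labeled 'background'."""
    height, width = len(image), len(image[0])
    detections = []
    for top, left, bottom, right in sliding_windows(height, width, window, stride):
        crop = [row[left:right] for row in image[top:bottom]]
        label = classify_image(crop)
        if label != "background":
            detections.append(((top, left, bottom, right), label))
    return detections


def toy_classifier(crop):
    """Hypothetical stand-in: call a crop 'object' if its mean pixel value exceeds 0.5."""
    pixels = [p for row in crop for p in row]
    return "object" if sum(pixels) / len(pixels) > 0.5 else "background"


image = [
    [0, 0, 0, 0],
    [0, 1, 1, 0],
    [0, 1, 1, 0],
    [0, 0, 0, 0],
]
detections = detect(image, toy_classifier)
print(detections)
```

Only the window that fully covers the bright 2x2 patch is kept; every other window is classified as background.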

    def classify_image(image):
        ...

Machine Learning: Data-Driven Approach

- Collect a dataset of images and labels

- Use Machine Learning to train a classifier

- Evaluate the classifier on new images

    def train(images, labels):
        # Machine learning
        # Memorize all data and labels
        model = (images, labels)
        return model

    def predict(model, test_images):
        ...
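The third step, evaluating the classifier on new images, usually means comparing predicted labels with the true labels. A minimal sketch, assuming labels are plain Python values; the helper name `accuracy` and the example labels are made up for illustration:

```python
def accuracy(predicted_labels, true_labels):
    """Fraction of test images whose predicted label matches the true label."""
    if len(predicted_labels) != len(true_labels):
        raise ValueError("predicted and true label lists must have the same length")
    correct = sum(p == t for p, t in zip(predicted_labels, true_labels))
    return correct / len(true_labels)


# Three of the four predictions match the true labels.
score = accuracy(["cat", "dog", "cat", "car"], ["cat", "dog", "dog", "car"])
print(score)
```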

Distance Metric to compare images

L1 (Manhattan) distance: $d_1(I_1, I_2) = \sum_p |I_1^p - I_2^p|$
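A nearest neighbor classifier built on the L1 distance can be sketched as follows. The assumptions here: each image is a flat list of pixel values, all images have the same length, and the pixel values and labels in the example are made up.

```python
def l1_distance(image_a, image_b):
    """Sum of absolute pixel differences between two equally sized images."""
    return sum(abs(a - b) for a, b in zip(image_a, image_b))


def train(images, labels):
    """Nearest neighbor 'training': memorize all data and labels."""
    return list(images), list(labels)


def predict(model, test_images):
    """Give each test image the label of its closest training image."""
    train_images, train_labels = model
    predictions = []
    for test_image in test_images:
        distances = [l1_distance(test_image, x) for x in train_images]
        nearest = distances.index(min(distances))
        predictions.append(train_labels[nearest])
    return predictions


model = train([[10, 20, 30, 40], [200, 210, 220, 230]], ["dark", "bright"])
predictions = predict(model, [[12, 18, 33, 41]])
print(predictions)
```

Training is constant-time bookkeeping, while prediction compares the test image against every stored training image, so the cost sits at test time.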

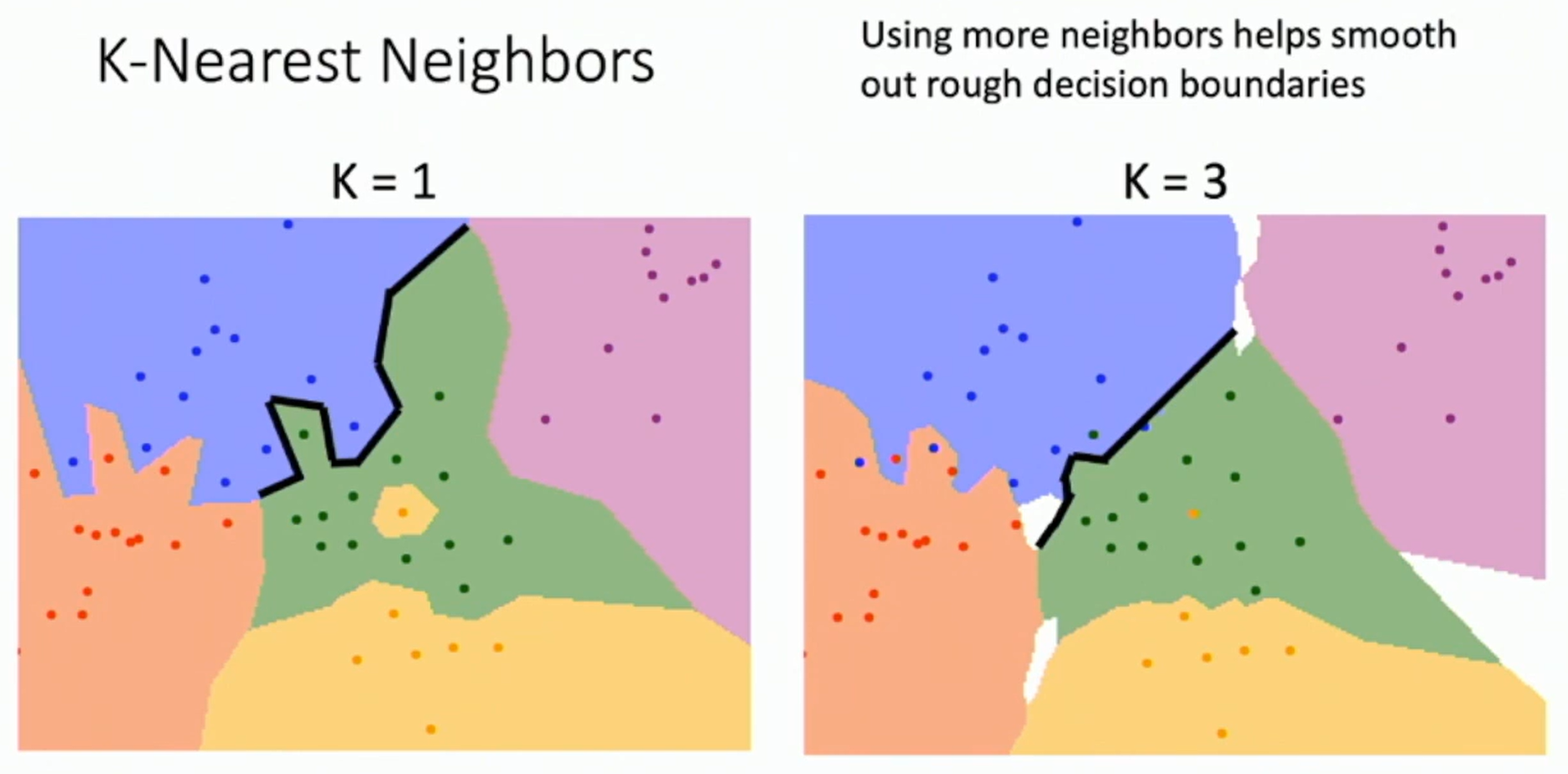

Nearest Neighbor Decision Boundaries

A decision boundary is the boundary between two classification regions.

Decision boundaries can be noisy and are affected by outliers.

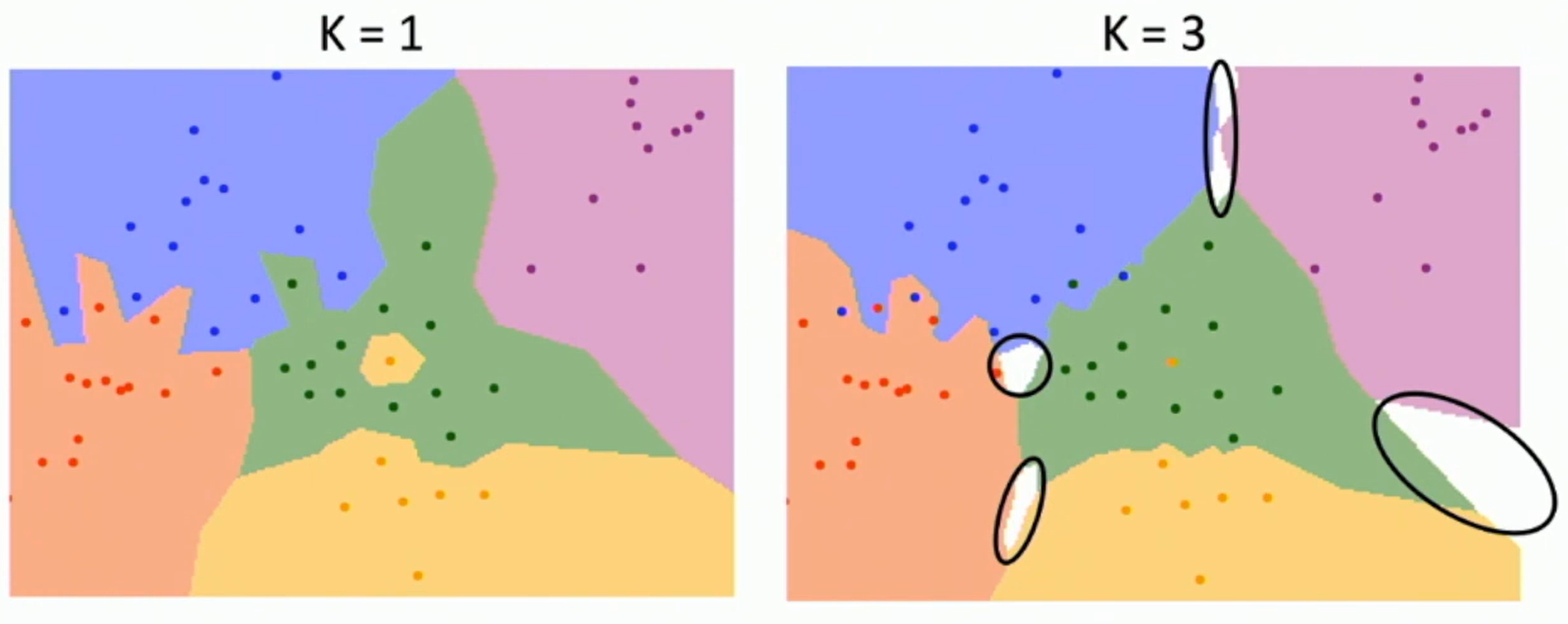

K-Nearest Neighbors

Using more neighbors helps smooth out rough decision boundaries.

Using more neighbors helps reduce the effect of outliers.

When K > 1 there can be ties between classes, which need to be broken somehow.

The white regions are where the vote among the three nearest neighbors (K = 3) is tied.
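A minimal K-nearest-neighbors sketch with majority voting. The tie-breaking rule used here (prefer the tied class that owns the closest neighbor) is an assumption chosen for illustration, not a rule from the lecture; the 1-D "images" and the name `knn_predict` are likewise made up.

```python
from collections import Counter


def l1_distance(image_a, image_b):
    """Sum of absolute pixel differences between two equally sized images."""
    return sum(abs(a - b) for a, b in zip(image_a, image_b))


def knn_predict(train_images, train_labels, test_image, k=3):
    """Majority vote among the k training images nearest to test_image."""
    # Training indices sorted from nearest to farthest.
    order = sorted(range(len(train_images)),
                   key=lambda i: l1_distance(test_image, train_images[i]))
    neighbors = order[:k]
    votes = Counter(train_labels[i] for i in neighbors)
    top = max(votes.values())
    tied = {label for label, count in votes.items() if count == top}
    # Assumed tie-break: neighbors are sorted by distance, so the first
    # neighbor whose label is among the tied classes decides the vote.
    for i in neighbors:
        if train_labels[i] in tied:
            return train_labels[i]


train_images = [[0], [4], [6], [20], [22]]
train_labels = ["a", "a", "b", "b", "b"]
results = {k: knn_predict(train_images, train_labels, [7], k) for k in (1, 2, 3)}
print(results)
```

For the test point 7, the single nearest neighbor is the lone "b" sample at 6, K = 2 produces a tie that the rule resolves toward "b", and K = 3 lets the two "a" samples outvote it.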

L2 (Euclidean) distance: $d_2(I_1, I_2) = \sqrt{\sum_p (I_1^p - I_2^p)^2}$
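The two metrics can give different values for the same pair of images. A small sketch, assuming images are flat lists of pixel values; the two-pixel example is made up:

```python
import math


def l1_distance(image_a, image_b):
    """Manhattan distance: sum of absolute pixel differences."""
    return sum(abs(a - b) for a, b in zip(image_a, image_b))


def l2_distance(image_a, image_b):
    """Euclidean distance: square root of the sum of squared pixel differences."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(image_a, image_b)))


d1 = l1_distance([0, 0], [3, 4])  # |3| + |4|
d2 = l2_distance([0, 0], [3, 4])  # sqrt(9 + 16)
print(d1, d2)
```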

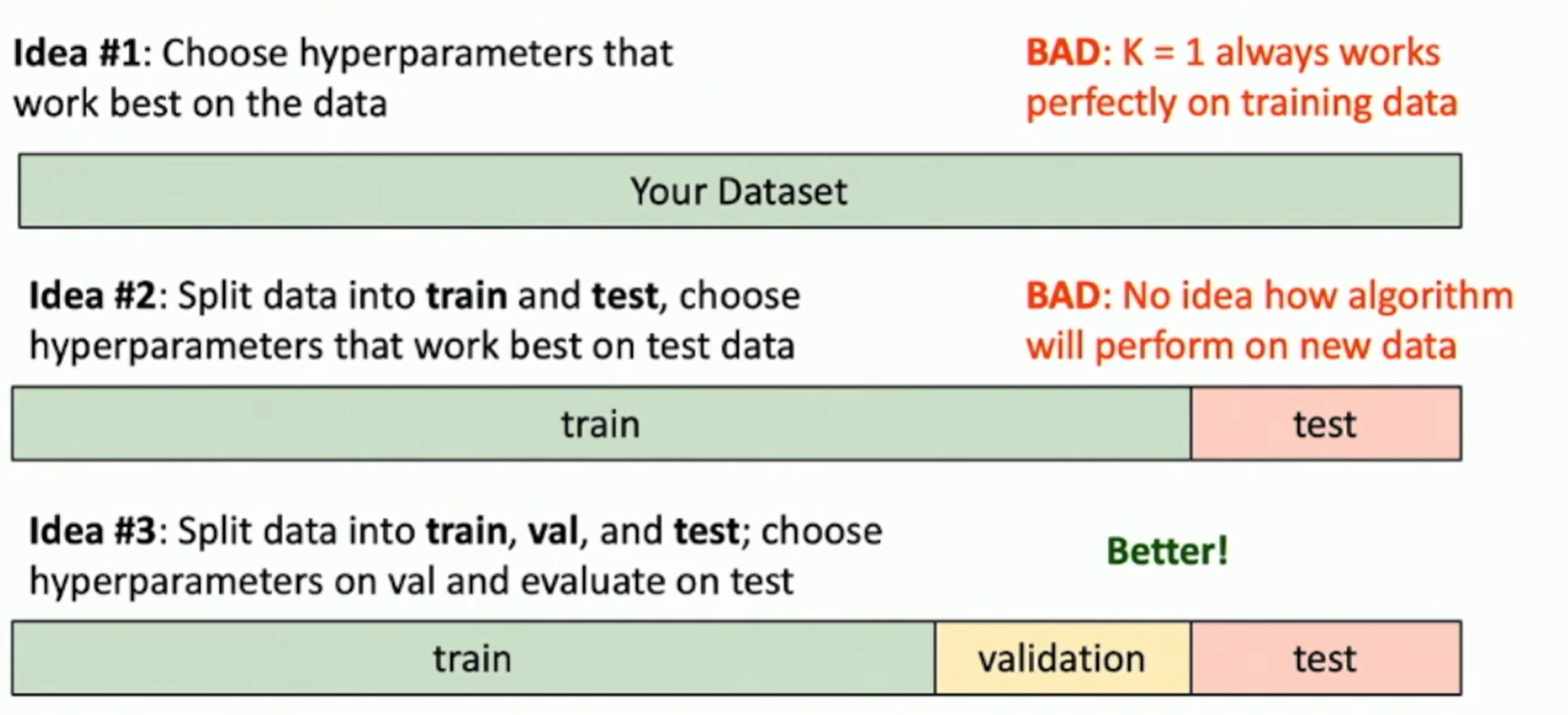

Setting Hyperparameters

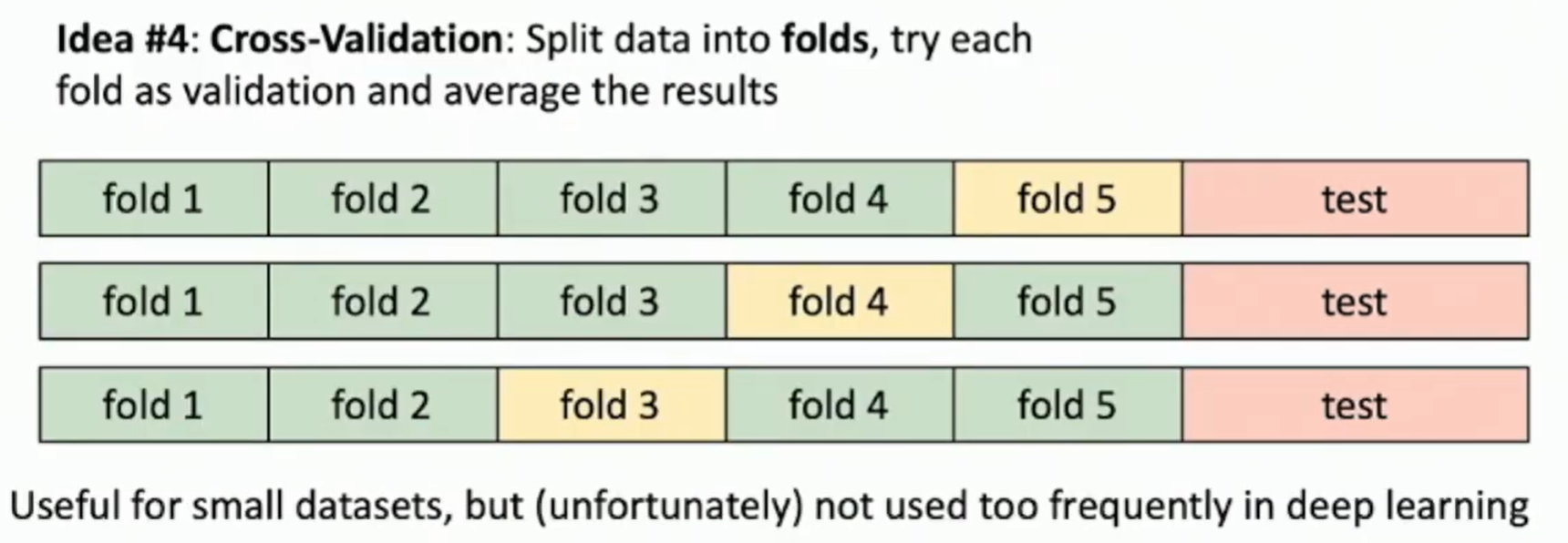

Among the three ideas above, the best is Idea #3.

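Of course, we can also split the dataset into more parts (folds) to get a better estimate of generalization performance.

This is cross-validation.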
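Cross-validation for choosing K can be sketched as follows. Everything here is an assumption for illustration: the 1-D toy data (two clusters plus one mislabeled outlier), the interleaved fold assignment, and the names `knn_predict` and `cross_validate`. In practice a held-out test set would still be kept aside and used only once at the end.

```python
from collections import Counter


def knn_predict(train_x, train_y, x, k):
    """Majority vote among the k training points nearest to x (L1 distance)."""
    order = sorted(range(len(train_x)),
                   key=lambda i: sum(abs(a - b) for a, b in zip(x, train_x[i])))
    votes = Counter(train_y[i] for i in order[:k])
    return votes.most_common(1)[0][0]


def cross_validate(xs, ys, k, num_folds):
    """Mean validation accuracy of k-NN, averaged over num_folds folds."""
    accuracies = []
    for fold in range(num_folds):
        # Every num_folds-th sample, starting at `fold`, is held out for validation.
        val_idx = set(range(fold, len(xs), num_folds))
        train_x = [x for i, x in enumerate(xs) if i not in val_idx]
        train_y = [y for i, y in enumerate(ys) if i not in val_idx]
        correct = sum(knn_predict(train_x, train_y, xs[i], k) == ys[i]
                      for i in val_idx)
        accuracies.append(correct / len(val_idx))
    return sum(accuracies) / num_folds


# Toy data: class "a" near 0, class "b" near 20, plus one "b" outlier at 3.
xs = [[0], [2], [3], [5], [8], [20], [22], [23], [25], [27]]
ys = ["a", "a", "b", "a", "a", "b", "b", "b", "b", "b"]
scores = {k: cross_validate(xs, ys, k, num_folds=5) for k in (1, 3)}
best_k = max(scores, key=scores.get)
print(scores, best_k)
```

On this toy data K = 3 scores higher than K = 1, because the extra neighbors outvote the single outlier.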

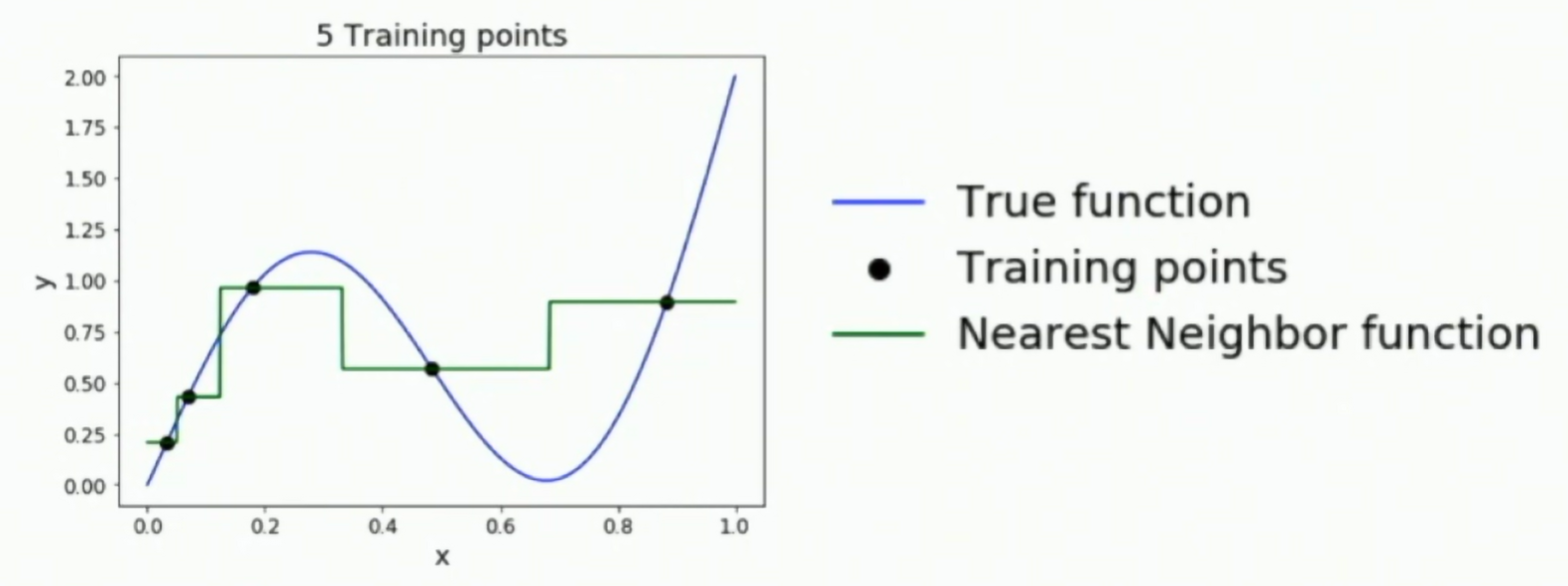

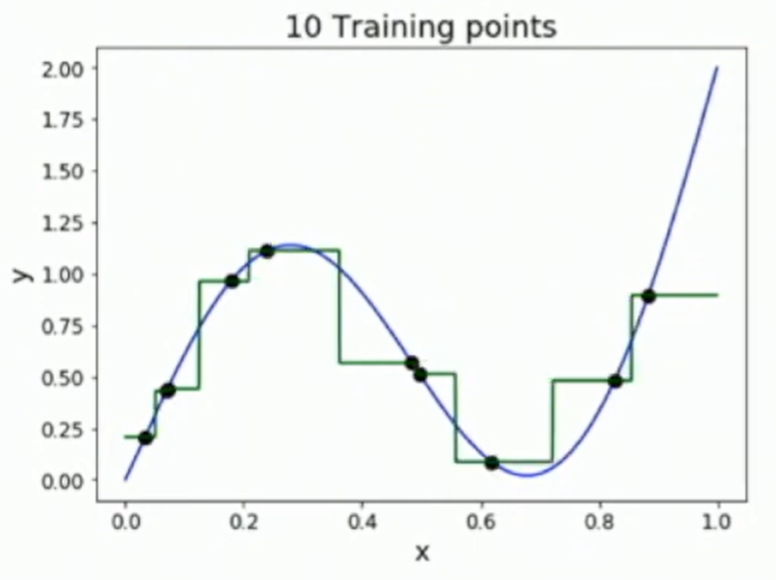

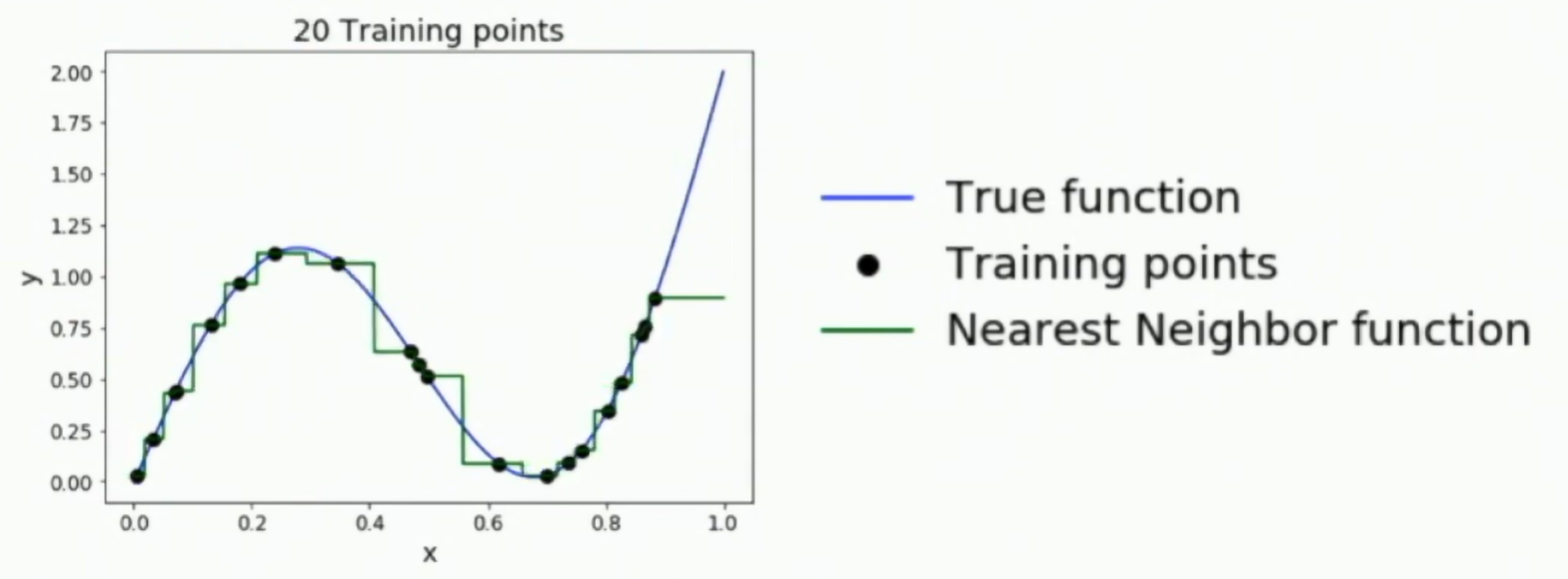

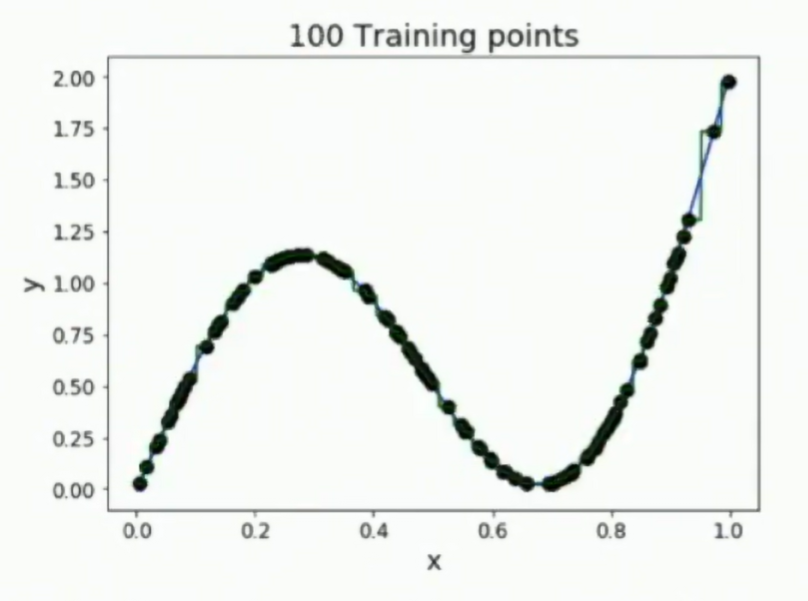

As the number of training samples grows, we can see that the nearest neighbor classifier performs well.